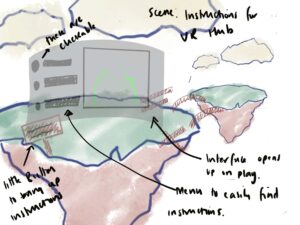

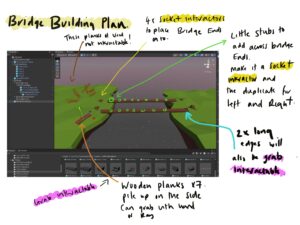

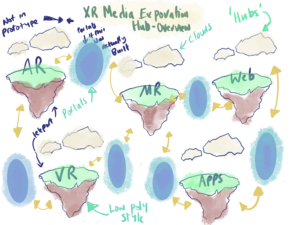

Week 8: Exploring Mixed Reality

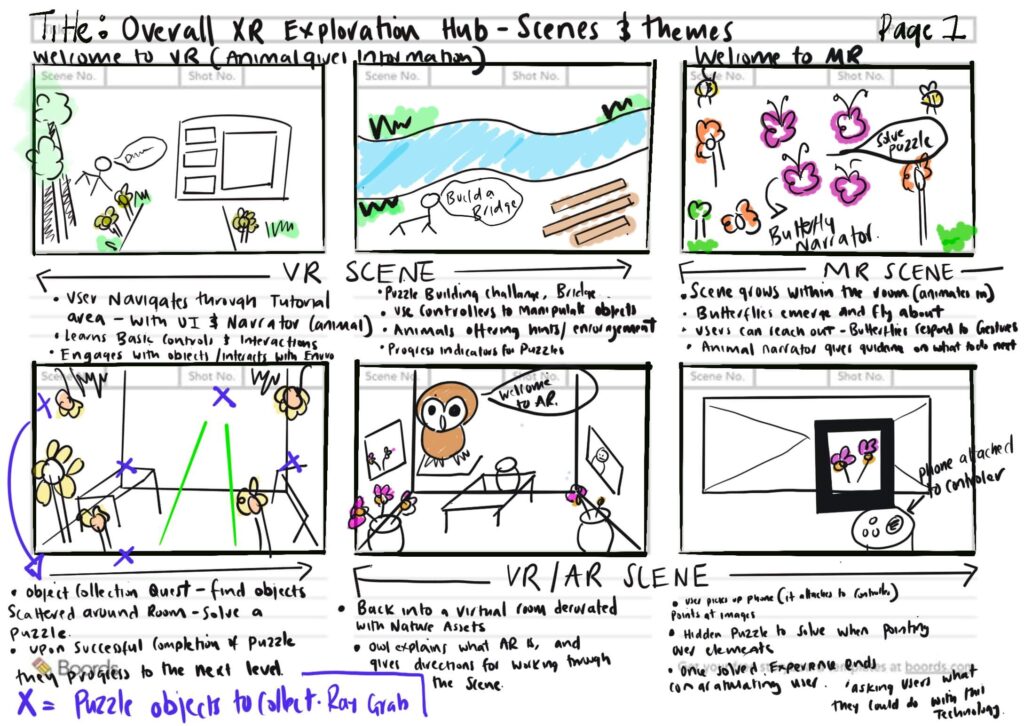

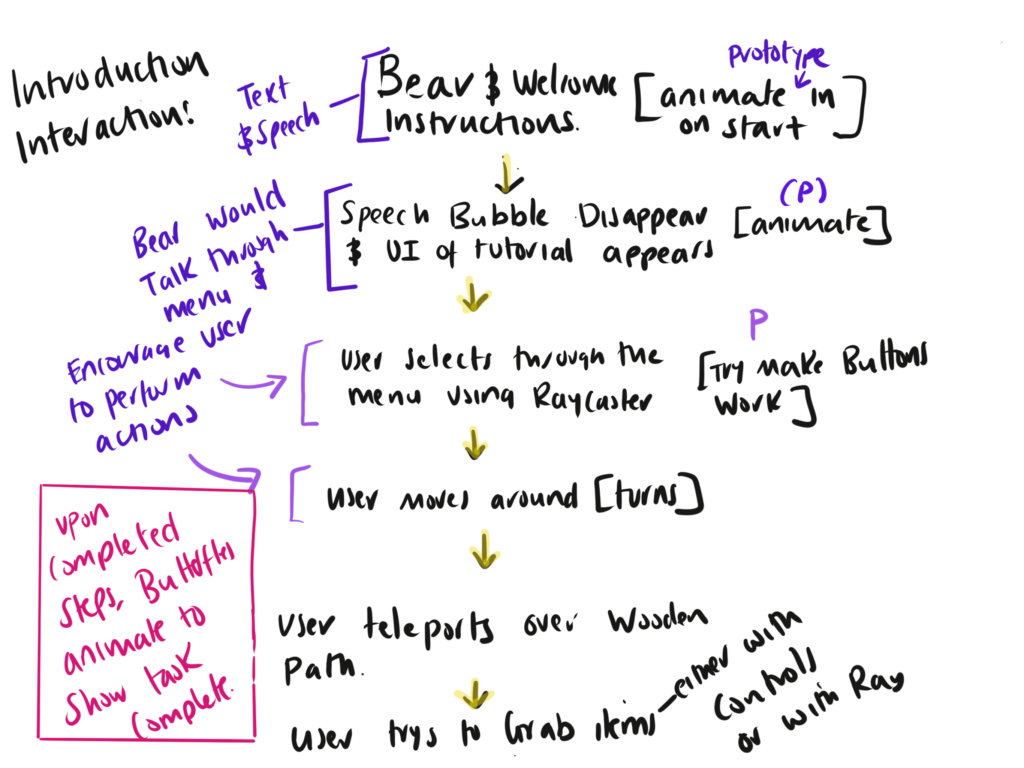

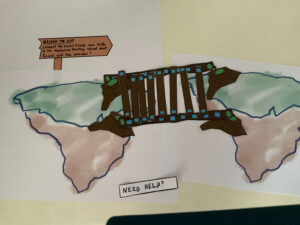

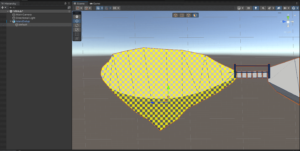

The primary objective of this assignment is to propose and prototype a Mixed Reality (MR) application that combines virtual and augmented elements. You are encouraged to think beyond traditional boundaries and may use any tools you need to communicate your MR concept and demonstrate a seamless integration of the digital and physical worlds.