The primary objective of this assignment is to challenge you to propose and prototype a Mixed Reality (MR) application, leveraging the convergence of virtual and augmented elements. This task encourages you to think beyond traditional boundaries, utilizing any necessary tools to effectively communicate your MR concepts and showcase a seamless integration of digital and physical worlds.

Designing for Spatial with Apple

This week began with watching the Apple Worldwide Developers Conference presentations: Design for Spatial User Interfaces (Apple, 2023a) and Principles of Spatial Design (Apple, 2023b). Seeing how the Apple design team changed their apps for spatial situations was inspiring. The world around us has become an endless canvas for creativity, complete with depth, scale, natural inputs, and spatial sounds. Whether we’re developing something completely new or adding crucial moments to existing apps, it’s exciting to consider how we may craft previously unthinkable experiences.

Although the design system and its components were created for visionOS, they provide a framework for developing spatial experiences on any platform.

Key points for spatial design on Miro

Last of the weekly assignments, then on to the final project!

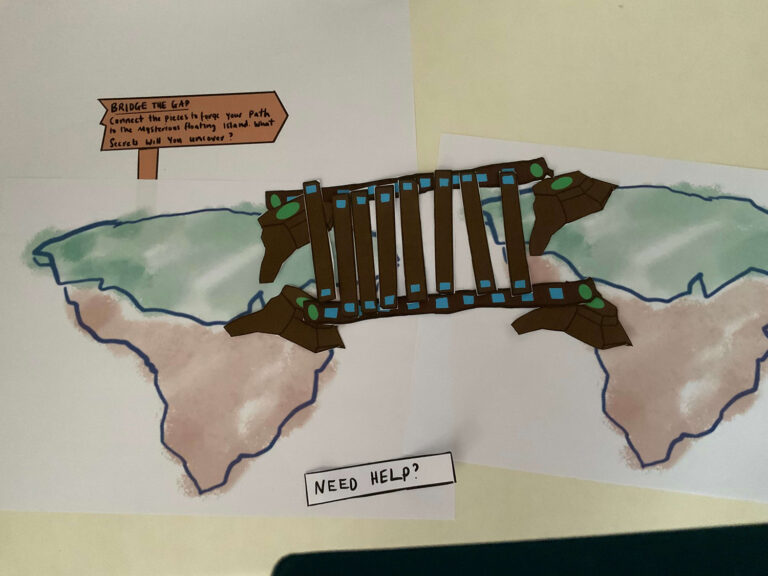

Reflecting on the past seven weeks, working with Unity has been challenging but rewarding as I learn more about the software’s features and functionality and get things to work! This week, my initial plan was to create a prototype using Shapes XR to bridge the gap between traditional academic materials and immersive learning experiences by creating a resource tailored for creative practice students that would assist in learning how to write a case study. This has always been a problematic module to teach!

After attending Thursday’s workshop and watching our instructor demonstrate Meta’s all-in-one XR SDK, I attempted to jump the Shapes XR ship and create this week’s assignment in Unity. Looking back on my weekly assignments, I have always gravitated towards the research and conceptualisation stages, as I enjoy design research and how it can fuel creative ideas. This week, I focused on learning to use the Meta SDK, which will later give me ideas for creating my spatial case study experience. I am always a little apprehensive about launching straight into software in case I cannot visualise my ideas [I have now realised this is a pattern for all my practical work; I just need to get stuck in!].

To further my understanding of the interactive components of a mixed-reality experience, I attempted to create a simple ball-sorting game [inspired by this week’s learning materials]. Coloured balls would scatter around the room, and the user could collect them and sort them into boxes of the matching colour.
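The sorting rule described above can be pinned down independently of Unity. What follows is a minimal, engine-agnostic sketch in Python, not the Unity implementation: the palette, the room dimensions, and every name in it (`Ball`, `Box`, `scatter_balls`, `drop_in_box`, `is_sorted`) are hypothetical and chosen only for illustration.

```python
import random
from dataclasses import dataclass, field

# Hypothetical palette; the real game could use any set of colours.
COLOURS = ("red", "green", "blue")


@dataclass
class Ball:
    colour: str
    position: tuple  # (x, y, z) in metres, y = 0.0 means on the floor


@dataclass
class Box:
    colour: str
    contents: list = field(default_factory=list)


def scatter_balls(count, room_size, rng):
    """Place `count` balls at random floor positions inside a width x depth room."""
    width, depth = room_size
    balls = []
    for i in range(count):
        colour = COLOURS[i % len(COLOURS)]  # cycle through the palette
        position = (rng.uniform(0, width), 0.0, rng.uniform(0, depth))
        balls.append(Ball(colour, position))
    return balls


def drop_in_box(ball, box):
    """Accept the ball only if its colour matches the box; return True if accepted."""
    if ball.colour == box.colour:
        box.contents.append(ball)
        return True
    return False


def is_sorted(balls, boxes):
    """The game is complete once every ball has been accepted by some box."""
    placed = sum(len(box.contents) for box in boxes)
    return placed == len(balls)
```

In a Unity scene the same rule would most likely sit in a trigger or collision callback on each box, but that wiring is outside what this sketch covers.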

Back into Unity!

Meta's all-in-one XR SDK

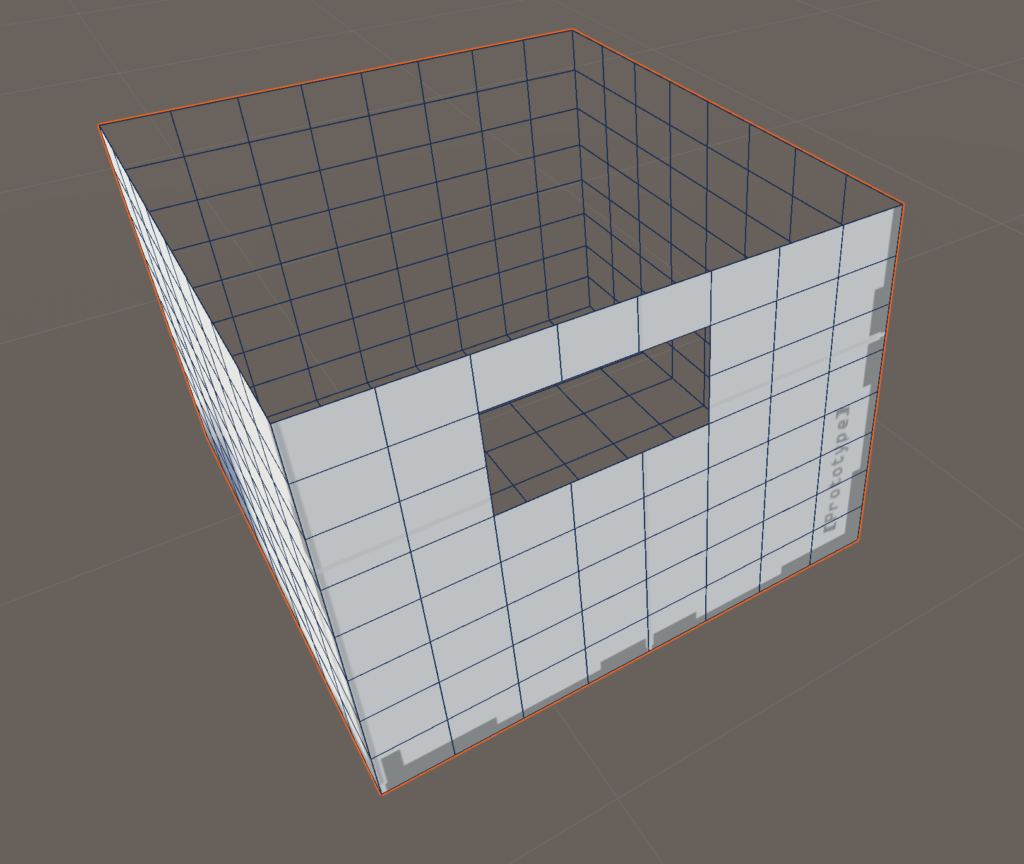

Next, I created a project using Meta’s all-in-one XR SDK to explore the building blocks and determine how to use them to make objects interactable, which was really easy to do once I understood how!

To start building the assets, I imported ProBuilder to create boxes for the user to sort the balls into. I used a cube, deleted the top, subdivided the surfaces, and created handle holes. I made it interactable and assigned it a blue material. However, something did not look right in the editor, and the box did not render correctly when I tested it in the headset.

As I was building the box, I felt I was not creating it correctly. Then, I remembered what we covered in a previous session: selecting edges, subdividing them, and extruding them with the ProBuilder Editor. I now had my interactable box and turned it into a prefab so I could duplicate it in different colours.

The next task was to make a ball from a sphere and add the interactions needed to make another prefab. All was going well until I tested my scene: I could not prevent the ball from falling through the floor. I spent considerable time trying to work out the problem and even tried recreating the project by following the ball-shooting tutorial that uses the deprecated Oculus package. Still, nothing worked, leaving me feeling somewhat defeated on this task. I had wanted to build on Valem’s XR tutorial to see if I could spawn balls of different colours from the controller and, when the app opens, have the boxes spread around the room.

A small win is that Unity is becoming more familiar!